Motion Capture

Hi, I'm a motion capture technician with experience in both technical and creative pipelines. I’ve been working with mocap systems since 2022, including hands-on work as a Mocap Technical Assistant at New York University Media Commons (2023–2024). From 2024 to 2025, I served as a Motion Capture Technician and Character Modeler at Woman Wizard.

I specialize in the complete motion capture pipeline, from suit setup and performance capture to retargeting in MotionBuilder and live-streaming into Unreal Engine 5. I’ve worked as the sole mocap technician on several animation and virtual production projects, handling setup, cleanup, retargeting, and scene integration.

Mocap Systems: OptiTrack, Rokoko

3D Software: Blender, Maya, Unreal Engine 5

Mocap Software: Motive, Rokoko Studio, MotionBuilder

Skill Set: Rigging, Pipeline Design, Equipment Maintenance, Motion Capture Suit Setup, Calibration, Troubleshooting, Retargeting, Cleanup & Animation

Motion Capture-Related Projects

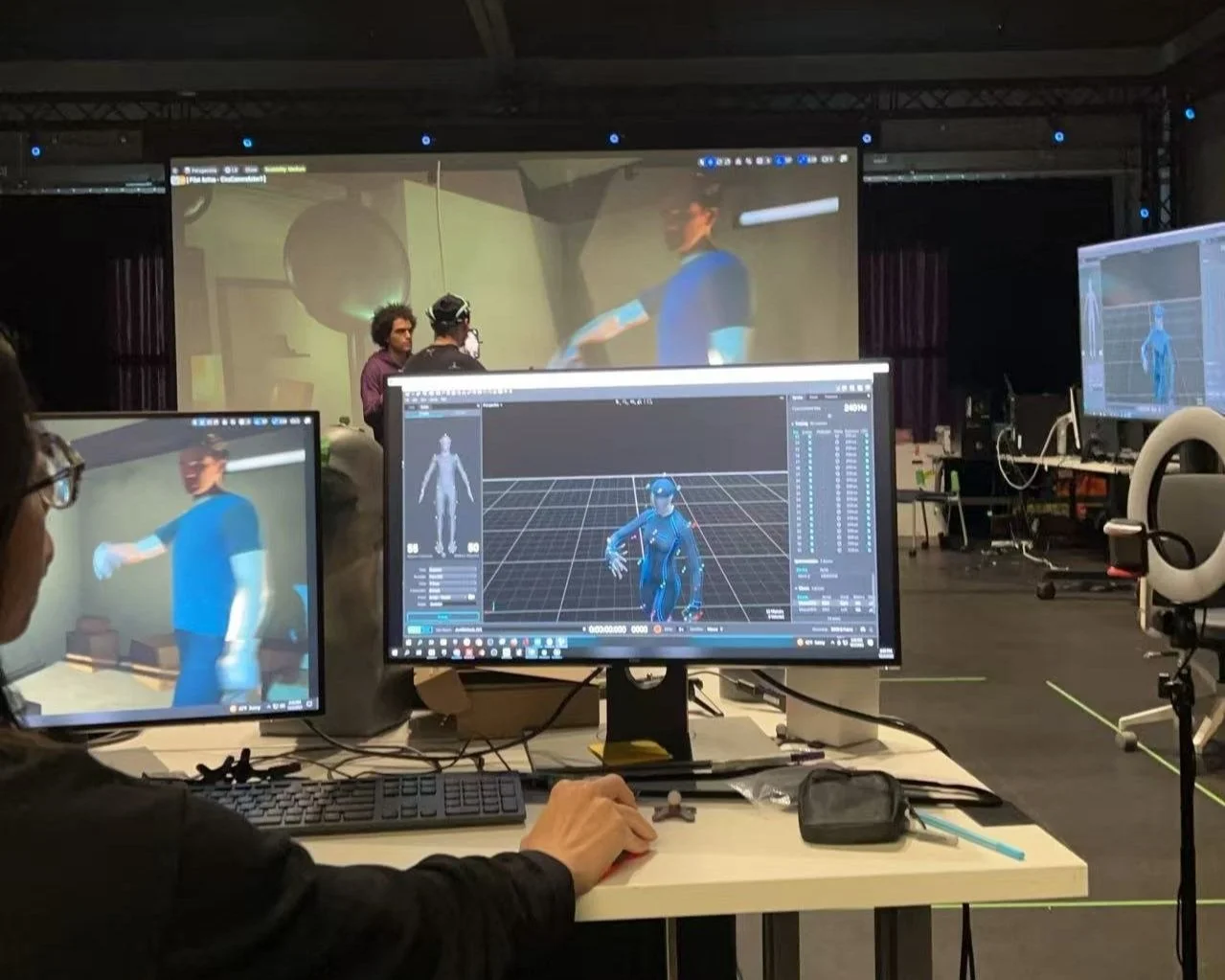

A selection of my motion capture-related projects, including rigging, characterization, and real-time streaming from tools like Motive and Rokoko Studio into game engines. I’ve worked across the full mocap pipeline, from character preparation to retargeting and live driving, in game, animation, and virtual performance productions.

NYU Motion Capture Workshop - 2023

Role: Workshop Instructor, Content Creator

Studio: NYU Media Commons

Toolset: Blender, Unreal Engine 5, MotionBuilder, After Effects

Contribution:

Designed and conducted a full workshop introducing character modeling, motion capture pipelines, and real-time animation with Unreal Engine’s Sequencer. Created all instructional files and demo scenes for teaching.

Ultra Lotus Mocap Film - 2024

Role: Creator, Director, Technical Artist

Studio: Graduate Thesis Project

Toolset: Blender, ZBrush, MotionBuilder, Motive, Unreal Engine 5, After Effects

Contribution:

Originated the concept and story. Fully responsible for character design, modeling, rigging, scriptwriting, storyboarding, motion capture direction, animation cleanup and retargeting, environment setup in UE5, cinematic animation, and post-production editing.

Cable of Kingdom Parsifal - 2024 - 2025

Role: Character Modeler & Motion Capture Technician

Studio: Woman Wizard

Toolset: Blender, ZBrush, MotionBuilder, Rokoko Studio, Unreal Engine 5

Contribution:

I optimized and modeled the Parsifal character based on concept art by Nini Than, then rigged the mesh and implemented an ARKit-style facial morph system. I collaborated closely with animators, directors, and producers to refine motion requirements and integrate capture into the pipeline: setting up the Rokoko hardware, creating a live-stream link into UE5, and managing on-set capture sessions. After each shoot, I maintained detailed motion file logs, cleaned up the raw data in MotionBuilder, and baked the polished animation back onto the character rig for engine integration.

Omnia - 2025

Role: Character Modeler & Animation Artist

Studio: Collaborative Project

Toolset: Blender, MotionBuilder, Unreal Engine 5

Contribution:

For the Omnia VR game, a three-person collaboration, I refined the base character model and added a custom control rig. In MotionBuilder, I imported animations from Mixamo’s library, adapted them to our rig, and refined timing and weight for VR performance. Finally, I exported the cleaned and retargeted animations into UE5 for smooth, low-latency playback in a VR environment.

Motion Capture Process

Most of my work involves motion capture. This is my typical workflow:

Rigging

Characterize

File Naming Convention

Capture Takes

Retarget

Clean up

1. Rigging

Before motion capture, I build a custom rig for each character based on the project’s animation needs. For stylized or anime-style characters, I often prepare facial morph targets (blendshapes) for use in facial capture or in-engine animation.

I organize the rig into clean, layered bone structures:

Deformation bones (DEF-)

Mechanism bones (Meh-)

Control bones (Ctl-)
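Because every bone carries a layer prefix, tools can filter the rig by name. Below is a minimal sketch, assuming each prefix is written without spaces directly before the bone name (e.g. `DEF-spine`) and matched case-insensitively. The function names are hypothetical, and keeping only deformation bones for export is an illustrative choice rather than a fixed rule.

```python
from collections import defaultdict

# Layer prefixes from the convention above (assumed to be written without spaces).
LAYER_PREFIXES = {
    "DEF-": "deform",
    "Meh-": "mechanism",
    "Ctl-": "control",
}


def group_bones_by_layer(bone_names):
    """Sort bone names into layers by prefix; anything else goes to 'unsorted'."""
    layers = defaultdict(list)
    for name in bone_names:
        for prefix, layer in LAYER_PREFIXES.items():
            # Case-insensitive match so "def-" and "DEF-" are treated the same.
            if name.upper().startswith(prefix.upper()):
                layers[layer].append(name)
                break
        else:
            layers["unsorted"].append(name)
    return dict(layers)


def export_bones(bone_names):
    """Hypothetical export filter: keep only the deformation layer."""
    return group_bones_by_layer(bone_names).get("deform", [])
```

Keeping mechanism and control bones out of the exported skeleton leaves the engine with a clean deformation hierarchy, while the full rig stays available for animation in the DCC.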

Face Rigging

For the face, I create shape keys covering a wide range of expressions, which support both facial mocap (e.g. ARKit) and hand-keyed animation.
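ARKit face tracking drives 52 named blendshapes, so a quick coverage check on a character’s shape keys catches gaps before a facial capture session. A sketch: the list below uses the names of Apple’s `ARFaceAnchor.BlendShapeLocation` constants as they are commonly spelled in rigging tools; some pipelines use other spellings (e.g. `_L`/`_R` suffixes), so adjust the list to whatever the capture app expects. The match is case-sensitive and the helper name is hypothetical. Inside Blender, the shape key names could be gathered with `obj.data.shape_keys.key_blocks.keys()`.

```python
# The 52 ARKit face-tracking blendshape names (camelCase spelling).
ARKIT_BLENDSHAPES = (
    "browDownLeft", "browDownRight", "browInnerUp", "browOuterUpLeft", "browOuterUpRight",
    "cheekPuff", "cheekSquintLeft", "cheekSquintRight",
    "eyeBlinkLeft", "eyeBlinkRight", "eyeLookDownLeft", "eyeLookDownRight",
    "eyeLookInLeft", "eyeLookInRight", "eyeLookOutLeft", "eyeLookOutRight",
    "eyeLookUpLeft", "eyeLookUpRight", "eyeSquintLeft", "eyeSquintRight",
    "eyeWideLeft", "eyeWideRight",
    "jawForward", "jawLeft", "jawOpen", "jawRight",
    "mouthClose", "mouthDimpleLeft", "mouthDimpleRight", "mouthFrownLeft", "mouthFrownRight",
    "mouthFunnel", "mouthLeft", "mouthLowerDownLeft", "mouthLowerDownRight",
    "mouthPressLeft", "mouthPressRight", "mouthPucker", "mouthRight",
    "mouthRollLower", "mouthRollUpper", "mouthShrugLower", "mouthShrugUpper",
    "mouthSmileLeft", "mouthSmileRight", "mouthStretchLeft", "mouthStretchRight",
    "mouthUpperUpLeft", "mouthUpperUpRight",
    "noseSneerLeft", "noseSneerRight",
    "tongueOut",
)


def missing_arkit_shapes(shape_key_names):
    """Return the ARKit blendshapes (in list order) that the model does not have."""
    present = set(shape_key_names)
    return [name for name in ARKIT_BLENDSHAPES if name not in present]
```

Extra shape keys (a "Basis" key, stylized anime expressions) are simply ignored, so the same check works for both hand-keyed and ARKit-driven faces.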

Anime-Style Character Face Setup

“Little Monster” - Blender - Morph Targets

Fantasy Film-Style Character Face Setup

“Parsifal” - Blender, ZBrush - Blendshape-based live facial capture test

2. Characterize

I use MotionBuilder for the retargeting process. Sometimes characters are streamed live from Motive or Rokoko Studio directly into the game engine. To avoid any unexpected issues during live sessions, I always characterize the character in MotionBuilder beforehand. This includes setting up the Control Rig properly and verifying that all joints and controllers respond correctly. Characterizing in advance ensures that the skeleton behaves predictably and reduces the risk of errors during real-time capture or retargeting.
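Part of characterizing in advance can be scripted as a pre-flight check: confirm that every required HumanIK slot is mapped to a bone that actually exists in the rig, and that no bone is used twice. A pure-Python sketch that does not call MotionBuilder’s API. The slot names follow MotionBuilder’s Character Definition naming, the 15-slot list is my assumption of the minimum required set, and the mapping dictionary would be filled in by hand or exported from the rig.

```python
# Assumed minimum HumanIK slots needed before characterization.
REQUIRED_SLOTS = (
    "Hips", "Spine", "Head",
    "LeftUpLeg", "LeftLeg", "LeftFoot",
    "RightUpLeg", "RightLeg", "RightFoot",
    "LeftArm", "LeftForeArm", "LeftHand",
    "RightArm", "RightForeArm", "RightHand",
)


def check_character_mapping(slot_to_bone, rig_bones):
    """Return readable problems; an empty list means the mapping looks ready."""
    problems = []
    rig = set(rig_bones)
    # Every required slot must point at a bone that exists in the rig.
    for slot in REQUIRED_SLOTS:
        bone = slot_to_bone.get(slot)
        if bone is None:
            problems.append(f"{slot}: not mapped")
        elif bone not in rig:
            problems.append(f"{slot}: bone '{bone}' not found in rig")
    # A bone mapped to two slots usually means a copy-paste mistake.
    seen = {}
    for slot, bone in slot_to_bone.items():
        if bone in seen:
            problems.append(f"{slot}: bone '{bone}' already used by {seen[bone]}")
        else:
            seen[bone] = slot
    return problems
```

Running this before a live session turns a silent mis-mapping (which shows up as a twisted limb on stage) into a readable list of fixes.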

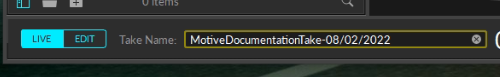

3. File Naming Convention

Before any mocap shoot, I create a detailed plan of the takes to be recorded, including action types, the characters involved, and the purpose of each take (e.g. loop, cinematic, facial-only). Each session begins with this action breakdown document, which lists every required movement per character; it helps optimize shoot time and avoid missing critical actions. A consistent file naming system is essential for efficient tracking, retargeting, and reuse in game and post-production.

Pre-Shoot Action Planning

My Format Example:

[Project]_[Character]_[Action]_[Take#]
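A naming format is easiest to enforce when names are generated and checked by a script rather than typed by hand. A minimal sketch of building and parsing names in this format. The format itself does not specify padding or character rules, so the three-digit zero-padded take number, the removal of spaces from field values, and the ban on underscores inside fields are my assumptions; the function names are hypothetical.

```python
import re

# [Project]_[Character]_[Action]_[Take#]; fields may not contain underscores,
# and the take number is assumed to be zero-padded to three digits.
TAKE_PATTERN = re.compile(
    r"^(?P<project>[A-Za-z0-9]+)_(?P<character>[A-Za-z0-9]+)_"
    r"(?P<action>[A-Za-z0-9]+)_(?P<take>\d{3})$"
)


def make_take_name(project, character, action, take):
    """Build a take name, stripping spaces so 'Walk Cycle' becomes 'WalkCycle'."""
    parts = [p.replace(" ", "") for p in (project, character, action)]
    name = f"{parts[0]}_{parts[1]}_{parts[2]}_{take:03d}"
    if not TAKE_PATTERN.match(name):
        raise ValueError(f"invalid take name: {name!r}")
    return name


def parse_take_name(name):
    """Split a take name back into its fields; raise ValueError if malformed."""
    match = TAKE_PATTERN.match(name)
    if match is None:
        raise ValueError(f"does not follow the naming convention: {name!r}")
    fields = match.groupdict()
    fields["take"] = int(fields["take"])
    return fields
```

Zero-padding keeps takes in order when a file browser sorts them alphabetically (`_002` before `_010`).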

Sample Planning Sheet:

| Character | Action | Notes |
|---|---|---|
| Parsifal | Facial Idle | ARKit facial only |
| Lotus | Walk Cycle | Loop, Slow |
| Parsifal | Angry Dialogue | Full body and facial |

4. Capture Takes

Before recording any motion capture session, I carefully prepare all the necessary equipment and calibrate the system to ensure high-precision data. My workflow is designed to be clean, efficient, and repeatable.

“Ultra Lotus” - OptiTrack Motive

Performer: Link, Josh, Zhuoyuan

1. Equipment Setup

Mocap Equipment - NYU Media Commons

I begin by checking and setting up all essential mocap gear:

Motive dongle

Mocap suits (Rokoko or OptiTrack-compatible)

Reflective markers for each suit, placed according to standard skeleton configuration

Two calibration wands for system calibration

Rigid bodies for props or special tracked objects

2. Calibration

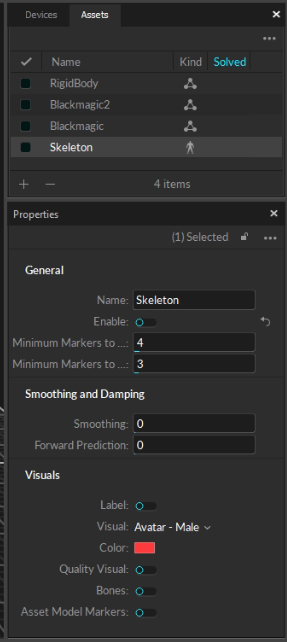

Motive - NYU Media Commons

Once setup is complete, I launch Motive and begin the calibration process. To ensure high accuracy, I aim to collect more than 7,000 wand samples per camera during calibration, which significantly improves tracking stability and motion fidelity.
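The 7,000 threshold can double as a quick checklist while wanding. A tiny sketch, reading the figure as a per-camera count of wand samples collected during calibration (an assumption). The counts would be copied by hand from Motive’s calibration view; the dictionary, camera IDs, and function name are hypothetical, and nothing here talks to Motive.

```python
def cameras_needing_samples(samples_by_camera, threshold=7000):
    """Return IDs of cameras whose wand-sample count has not yet passed the threshold."""
    return sorted(cam for cam, count in samples_by_camera.items() if count <= threshold)


# Hypothetical counts read off the calibration view mid-wanding.
print(cameras_needing_samples({"Cam01": 8200, "Cam02": 6500, "Cam03": 7000}))
```

Cameras on the returned list are the ones whose area of the volume needs more wand coverage before finishing calibration.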

3. Character Setup

After calibration:

The performer puts on the mocap suit.

I apply markers based on the standard human skeleton setup in Motive.

I create a Character asset inside Motive to track and label the performer’s data.

Motive - NYU Media Commons

4. Take Recording

Motive - NYU Media Commons

With the script and shot list prepared in advance, I follow a structured take-by-take capture process. Each motion is recorded in the correct order, according to the planned naming convention and performance requirements.

This streamlined pipeline ensures clean, well-organized motion data ready for retargeting and animation.
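To back up the shot list during a session, a small checker can compare the planned actions against the take files already recorded and list what is still missing. A sketch, assuming take files follow [Project]_[Character]_[Action]_[Take#] with spaces removed from action names; the function name and sample data are hypothetical.

```python
def missing_actions(planned, recorded_takes):
    """Return planned (character, action) pairs that have no recorded take yet."""
    captured = set()
    for name in recorded_takes:
        parts = name.split("_")
        if len(parts) != 4:
            continue  # skip files that do not follow the naming convention
        _project, character, action, _take = parts
        captured.add((character, action))
    # Planned actions may contain spaces; recorded names are assumed not to.
    return [(c, a) for c, a in planned if (c, a.replace(" ", "")) not in captured]


planned = [("Parsifal", "Facial Idle"), ("Lotus", "Walk Cycle"), ("Parsifal", "Angry Dialogue")]
recorded = ["Demo_Parsifal_FacialIdle_001", "Demo_Lotus_WalkCycle_001", "Demo_Lotus_WalkCycle_002"]
print(missing_actions(planned, recorded))
```

Checking before the performers take off their suits is far cheaper than discovering a missing action during retargeting.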

5. Retarget

Once the mocap data has been recorded and cleaned, I move into the retargeting stage using Autodesk MotionBuilder.

“Ultra Lotus” - MotionBuilder, Unreal Engine 5 - Mocap Cleanup

Import FBX

I import the recorded FBX files from Motive into MotionBuilder; these contain the raw skeleton animation captured during the session.

Character Setup

I load the pre-characterized character FBX file and make sure it is in a standard T-pose.

Retargeting

I map the source skeleton to the target character rig so that the captured motion drives the custom model accurately.

Bake to Skeleton

Once the motion looks clean and correctly mapped, I bake the animation directly onto the character’s skeleton for export and further use in engines like Unreal Engine.

This process keeps the motion data clean, consistent, and production-ready, and compatible with animation pipelines for games, films, and real-time projects.
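MotionBuilder’s HumanIK solver does the actual retargeting (proportions, offsets, contacts); the sketch below covers only the bookkeeping side of the mapping step: which source joint drives which target bone, and which source joints are still unmapped. The joint names are hypothetical Motive-style names paired with DEF- bones from the rigging convention, and should be checked against a real export.

```python
# Hypothetical map from Motive-style source joints to target rig bones.
JOINT_MAP = {
    "Hip": "DEF-hips",
    "Ab": "DEF-spine",
    "Chest": "DEF-chest",
    "Neck": "DEF-neck",
    "Head": "DEF-head",
}


def remap_tracks(source_tracks, joint_map):
    """Rename per-joint animation tracks; return (remapped, sorted unmapped joints)."""
    remapped = {}
    unmapped = []
    for joint, keys in source_tracks.items():
        target = joint_map.get(joint)
        if target is None:
            unmapped.append(joint)  # needs a mapping before baking
        else:
            remapped[target] = keys
    return remapped, sorted(unmapped)
```

Reviewing the unmapped list before baking avoids exporting a clip in which some joints silently stay at their bind pose.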


6. Clean Up

After retargeting, I carefully clean up the animation to ensure it’s smooth, natural, and production-ready.

“Ultra Lotus” - MotionBuilder - Mocap Cleanup

To reduce jittering or noise in the raw mocap data, I apply MotionBuilder’s built-in filters:

Smooth Filter

Butterworth Filter

These help preserve motion fidelity while eliminating unwanted micro-movements.

If the results are still not satisfactory, I manually adjust the F-Curves on specific joints for finer control.
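To show what a Butterworth filter does to a jittery curve, here is a textbook second-order Butterworth low-pass (bilinear-transform biquad with Q = 1/√2), run forward and then backward so the filtered motion is not shifted in time. This is a generic sketch, not MotionBuilder’s implementation, whose filter exposes its own parameters. The 6 Hz cutoff and 120 fps rate in the test are illustrative values only, and the function names are hypothetical.

```python
import math


def butterworth_lowpass_coeffs(cutoff_hz, sample_rate_hz):
    """Normalized (b, a) coefficients of a 2nd-order Butterworth low-pass biquad."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate_hz
    cos_w0 = math.cos(w0)
    q = 1.0 / math.sqrt(2.0)  # Q = 1/sqrt(2) gives the maximally flat Butterworth response
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = ((1.0 - cos_w0) / 2.0 / a0, (1.0 - cos_w0) / a0, (1.0 - cos_w0) / 2.0 / a0)
    a = (-2.0 * cos_w0 / a0, (1.0 - alpha) / a0)
    return b, a


def _biquad(samples, b, a):
    # Start from a steady state at the first sample so a flat curve stays flat.
    x1 = x2 = y1 = y2 = samples[0]
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def butterworth_filtfilt(samples, cutoff_hz, sample_rate_hz):
    """Zero-phase low-pass: filter forward, then filter the reversed result."""
    if not samples:
        return []
    b, a = butterworth_lowpass_coeffs(cutoff_hz, sample_rate_hz)
    forward = _biquad(samples, b, a)
    backward = _biquad(forward[::-1], b, a)
    return backward[::-1]
```

Applied to one animation channel (say, a wrist’s X rotation sampled per frame), frame-to-frame jitter well above the cutoff is strongly attenuated while slower, intentional motion passes through; choosing the cutoff too low is what makes filtered mocap look floaty.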